Stacking

Tip: Stacking a planetary image

Siril provides an option to automatically align the RGB channels at the end of stacking.

Screen: Stacking

Sum stacking

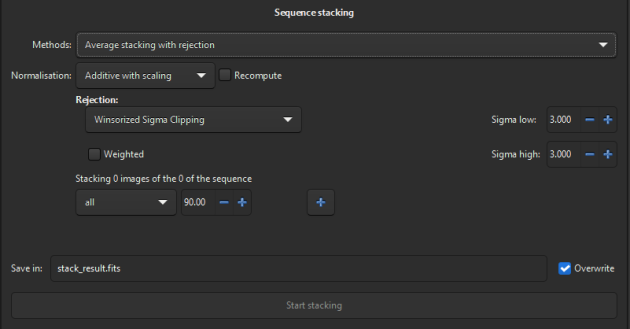

Average stacking with rejection

Median stacking

Pixel maximum stacking

Pixel minimum stacking

Extra

Sum stacking is a simple method that takes all the images and returns the mean value of each pixel. It is fast and frequently used for very large stacks (e.g. lucky imaging), where the sheer number of images keeps the contribution of any outlier value small. It is, however, not very robust, and methods with rejection should be used whenever possible.

Average stacking with rejection is the most common method for deep-sky imaging. The images are generally normalized beforehand. Then the values at each pixel location (called a pixel stack in the rest of this section) are inspected to reject outliers, and the remaining values are averaged.

Median stacking is used mainly for masters. It takes the median of each pixel stack. It is very resistant to outliers, at the cost of less noise reduction.

Pixel maximum/minimum stacking, as the names indicate, take the maximum/minimum of each pixel stack.
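The combination rules described above can be illustrated on a single pixel stack. This is a minimal sketch in plain Python, not Siril's implementation; the function name `combine` and the sample values are hypothetical.

```python
from statistics import mean, median

def combine(pixel_stack, method):
    """Combine the values that one pixel takes across all frames."""
    if method == "mean":
        return mean(pixel_stack)
    if method == "median":
        return median(pixel_stack)
    if method == "max":
        return max(pixel_stack)
    if method == "min":
        return min(pixel_stack)
    raise ValueError(f"unknown method: {method}")

# One pixel seen in five frames; 900 stands for an outlier such as a satellite trail.
stack = [100, 102, 98, 101, 900]
results = {m: combine(stack, m) for m in ("mean", "median", "max", "min")}
```

In this example the mean is pulled far above the typical values by the single outlier, while the median stays at a typical value, which is the robustness trade-off described in the text.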

Tip

For both sum stacking and average stacking with rejection, any shifts stored in the sequence file are applied.

No normalization

Additive

Multiplicative

Additive with scaling

Multiplicative with scaling

Extra

When using rejection stacking, we generally normalize the images so that their histograms have a similar level and spread, which in turn prevents entire images from being detected as outliers.

To get a measure of level and spread for each image, Siril uses statistical estimators of location and scale (https://free-astro.org/index.php?title=Siril:Statistics). The median is a valid estimator of location, while the MAD or sqrt(BWMV) can be used for scale. However, to make these measures more robust, pixels more than 6 × MAD away from the median are discarded. On this clipped dataset, the median and sqrt(BWMV) are recomputed and used as the location and scale estimators respectively.
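The two-pass estimation described above can be sketched as follows. This is an illustration under stated assumptions, not Siril's code: the function names are hypothetical, and the biweight midvariance uses the textbook formula with a tuning constant of 9.

```python
from math import sqrt
from statistics import median

def mad(values, center):
    """Median absolute deviation around a given center."""
    return median(abs(v - center) for v in values)

def bwmv(values, center, mad_value):
    """Biweight midvariance (textbook form, tuning constant 9)."""
    if mad_value == 0:
        return 0.0  # degenerate case: at least half the values equal the center
    num = 0.0
    den = 0.0
    for v in values:
        u = (v - center) / (9.0 * mad_value)
        if abs(u) < 1.0:
            num += (v - center) ** 2 * (1 - u * u) ** 4
            den += (1 - u * u) * (1 - 5 * u * u)
    return len(values) * num / (den * den)

def location_and_scale(pixels, clip=6.0):
    """Median and sqrt(BWMV), computed after discarding pixels beyond clip x MAD."""
    med = median(pixels)
    m = mad(pixels, med)
    kept = [p for p in pixels if abs(p - med) <= clip * m]
    loc = median(kept)
    scale = sqrt(bwmv(kept, loc, mad(kept, loc)))
    return loc, scale

# Hypothetical pixel values; 500 lies far more than 6 x MAD from the median.
pixels = [10, 11, 9, 10, 12, 8, 10, 11, 9, 500]
loc, scale = location_and_scale(pixels)
```

Here the value 500 is discarded in the first pass, so the recomputed location and scale describe only the bulk of the distribution.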

Once the estimators have been computed for each image, the images are rescaled according to the user's choice:

- Additive: each image is shifted by the difference between the reference image location and its own location.

- Additive with scaling: in addition to the location shift, the image is scaled by the ratio between the reference image scale and its own scale.

- Multiplicative: the image is scaled by the ratio between the reference image location and its own location.

- Multiplicative with scaling: in addition to the multiplicative rescaling, a second rescaling is applied, using the ratio between the reference image scale and its own scale.
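The four modes listed above can be written as simple per-pixel transforms. The sketch below is one possible reading of those descriptions, not Siril's implementation; the function name, mode strings, and sample numbers are assumptions made for illustration.

```python
def normalize(pixels, loc, scale, ref_loc, ref_scale, mode):
    """Rescale one image toward the reference image's location and scale."""
    if mode == "none":
        return list(pixels)
    if mode == "additive":
        # Shift so that the image location matches the reference location.
        return [p + (ref_loc - loc) for p in pixels]
    if mode == "additive_scaling":
        # Match the spread first, then the location.
        k = ref_scale / scale
        return [(p - loc) * k + ref_loc for p in pixels]
    if mode == "multiplicative":
        # Scale so that the image location matches the reference location.
        k = ref_loc / loc
        return [p * k for p in pixels]
    if mode == "multiplicative_scaling":
        # Multiplicative rescaling followed by the scale ratio.
        k = (ref_loc / loc) * (ref_scale / scale)
        return [p * k for p in pixels]
    raise ValueError(f"unknown mode: {mode}")

# Hypothetical image with location 100 and scale 10; reference has 150 and 20.
image = [90, 100, 110]
additive = normalize(image, 100, 10, 150, 20, "additive")
additive_scaling = normalize(image, 100, 10, 150, 20, "additive_scaling")
multiplicative = normalize(image, 100, 10, 150, 20, "multiplicative")
```

In both additive modes and in the plain multiplicative mode, the pixel sitting at the image location (100) ends up at the reference location (150); the modes differ in what happens to the spread around it.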

For stacking lights, Additive with scaling should be the preferred method.

For stacking flats, Multiplicative should be the preferred method as it normalizes the incoming flux. This is particularly relevant if you have shot sky flats (for which luminosity varies as the sun rises or sets) or very short exposure flats (for which the incoming flux is very sensitive to the accuracy of the camera shutter speed).

For stacking darks or offsets, always use No Normalization.

By default, Siril reads the estimators used to compute the normalization coefficients from the sequence file if they have already been computed. However, you can force Siril to ignore them and recompute them before stacking.

Warning

Computing these estimators is a demanding process in terms of computational time, so use this feature only if you think it is necessary.

Rejection is the process of inspecting each pixel stack and determining whether some values are outliers with respect to the other values of the set.

Let's take a simple example: imagine that one of your images contains a plane or satellite track. For all the pixels along this track, the values are abnormally high compared to the values of the same pixels in all the other images of your stack. Rejection detects that, for this particular image, the values of the pixels along the track do not "fit" the normal population and should not be taken into account.

Percentile Clipping is a one-step rejection algorithm ideal for small sets of data (up to 6 images).

Sigma Clipping is an iterative algorithm which rejects pixels whose distance from the median exceeds one of two given thresholds (low and high), expressed in sigma units.
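As a rough illustration of the idea, the loop below applies sigma clipping to a single pixel stack. It is a sketch under assumptions, not Siril's code: the function name, the default thresholds, and the use of the population standard deviation as sigma are choices made for this example.

```python
from statistics import mean, median, pstdev

def sigma_clip(pixel_stack, sigma_low=3.0, sigma_high=3.0):
    """Iteratively drop values too far from the median, then average the rest."""
    kept = list(pixel_stack)
    while len(kept) > 2:
        med = median(kept)
        sigma = pstdev(kept)
        survivors = [
            v for v in kept
            if med - v <= sigma_low * sigma and v - med <= sigma_high * sigma
        ]
        if len(survivors) == len(kept):
            break  # nothing rejected in this pass: the stack is stable
        kept = survivors
    return mean(kept), kept

# Twelve hypothetical frames; 900 plays the role of a satellite trail.
stack = [100, 102, 98, 101, 99, 103, 97, 100, 101, 99, 100, 900]
clipped_mean, kept = sigma_clip(stack)
```

The first pass rejects 900, the second pass rejects nothing, and the remaining eleven values are averaged.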

MAD Clipping is an iterative algorithm working as Sigma Clipping except that the estimator used is the Median Absolute Deviation (MAD). This is generally used for noisy infrared image processing.

Median Sigma Clipping is the same algorithm as Sigma Clipping, except that the rejected pixels are replaced by the median value.

Winsorized Sigma Clipping is very similar to the Sigma Clipping method, but it uses an algorithm based on Huber's work[2].

Linear Fit Clipping is an algorithm developed by Juan Conejero, main developer of PixInsight[3]. It fits the best straight line (y=ax+b) to the pixel stack and rejects outliers. This algorithm performs very well with large stacks and with images containing sky gradients with differing spatial distributions and orientations.
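The line-fitting idea can be sketched on one pixel stack. The details below are assumptions for illustration rather than the reference algorithm: the stack is sorted, a least-squares line is fitted to value versus rank, and the mean absolute residual serves as sigma. The function names and thresholds are hypothetical.

```python
def fit_line(ys):
    """Least-squares fit of y = a*x + b with x = 0, 1, ..., len(ys) - 1."""
    n = len(ys)
    mx = (n - 1) / 2
    my = sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in range(n))
    sxy = sum((x - mx) * (y - my) for x, y in enumerate(ys))
    a = sxy / sxx
    return a, my - a * mx

def linear_fit_clip(pixel_stack, sigma_low=3.0, sigma_high=3.0):
    """Reject values lying too far from a line fitted to the sorted stack."""
    kept = sorted(pixel_stack)
    while len(kept) > 3:
        a, b = fit_line(kept)
        residuals = [y - (a * x + b) for x, y in enumerate(kept)]
        sigma = sum(abs(r) for r in residuals) / len(residuals)
        survivors = [
            y for y, r in zip(kept, residuals)
            if -r <= sigma_low * sigma and r <= sigma_high * sigma
        ]
        if len(survivors) == len(kept):
            break  # no value rejected in this pass
        kept = survivors
    return sum(kept) / len(kept), kept

# Same hypothetical stack as a satellite-trail case: one value at 900.
stack = [100, 102, 98, 101, 99, 103, 97, 100, 101, 99, 100, 900]
fit_mean, fit_kept = linear_fit_clip(stack)
```

With a single strong outlier in a small stack, the outlier itself tilts the fitted line, which is one reason this kind of method is better suited to large stacks.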

The Generalized Extreme Studentized Deviate Test algorithm[4] is a generalization of Grubbs' test that is used to detect one or more outliers in a univariate data set that follows an approximately normal distribution. This algorithm performs very well on large datasets of more than 50 images.

Rejection parameters are used to specify how aggressive the rejection process should be:

For Percentile clipping, the low and high values specify the percentile below/above which the values should be rejected. Increasing the low value or decreasing the high value increases the rejection rate (more pixels get rejected).

For all other clipping methods, the sigma values specify the number of standard deviations used to clip the distributions. Therefore, increasing these values decreases the rejection rate (fewer pixels get rejected).

For the Generalized Extreme Studentized Deviate Test, the ESD outliers value specifies the maximum percentage of the stack that can be rejected, while the ESD significance specifies the threshold used to detect outliers. Increasing these values increases the rejection rate (more pixels get rejected).

When checked, this option weights each image of the stack, giving more importance to frames with lower background noise.
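One common way to give more importance to less noisy frames is inverse-variance weighting. The sketch below assumes that scheme for illustration; the text above does not state the exact formula Siril uses, and the function names and numbers are hypothetical.

```python
def noise_weights(noise_levels):
    """Weights proportional to 1 / noise^2, normalized to sum to 1."""
    inverse_variance = [1.0 / (s * s) for s in noise_levels]
    total = sum(inverse_variance)
    return [w / total for w in inverse_variance]

def weighted_mean(values, weights):
    """Weighted average of one pixel stack."""
    return sum(v * w for v, w in zip(values, weights)) / sum(weights)

# Three hypothetical frames; the first has half the background noise of the others.
weights = noise_weights([1.0, 2.0, 2.0])
pixel = weighted_mean([100.0, 106.0, 106.0], weights)
```

The cleaner frame receives four times the weight of each noisy frame, so the weighted result sits closer to its value than a plain average would.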

all the images of the sequence

images previously manually selected from the sequence

best images, filtered from the registration data (star-based methods), either FWHM, weighted FWHM or roundness

best images, filtered from the registration data (planetary methods)

Extra

You can combine multiple filters to remove images based on different criteria (add more criteria by pressing the '+' sign on the right):

- FWHM is the mean Full Width at Half Maximum of the stars in each image. The smaller, the better.

- weighted FWHM combines the FWHM, the number of stars detected, and the number of stars matched between each image and the reference image. A high wFWHM value indicates an image with clouds, a limited overlap with the reference image, or both.

- roundness is the mean roundness of all the stars in each image. Values close to 1 indicate round stars. Images with lower roundness usually have distorted stars, typically caused by guiding errors.
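Combining criteria amounts to keeping only the images that pass every filter. The sketch below illustrates this with hypothetical frame records and thresholds; it does not reflect how Siril stores registration data.

```python
# Hypothetical per-image registration data.
frames = [
    {"name": "img_001", "fwhm": 2.8, "roundness": 0.92},
    {"name": "img_002", "fwhm": 4.1, "roundness": 0.90},  # soft stars
    {"name": "img_003", "fwhm": 2.9, "roundness": 0.65},  # elongated stars
    {"name": "img_004", "fwhm": 3.0, "roundness": 0.88},
]

def select_frames(frames, max_fwhm, min_roundness):
    """Keep frames with small enough FWHM and round enough stars."""
    return [
        f["name"] for f in frames
        if f["fwhm"] <= max_fwhm and f["roundness"] >= min_roundness
    ]

selected = select_frames(frames, max_fwhm=3.5, min_roundness=0.8)
```

A frame is dropped as soon as it fails one criterion, so adding filters can only shrink the selection.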

Start stacking the current sequence of images, using the method and other information provided above.